Thing-onna-Stick

Custom electronics, LED lighting, Tasmanian Oak

2016-current

Thing-onna-Stick is available for order! As this is a bespoke device, please get in touch with me via email to learn more about having a Thing-onna-Stick customised for your home.

Twitter / X update: Since April 2023, Twitter has significantly reduced the capabilities of its API and introduced exorbitant fees for its filtered streaming service. This has major implications for creative practitioners and researchers. As a result, the backend processing that Thing-onna-Stick relies on is being revamped to draw on a more diverse range of data streams. On the bright side, the sentiment analysis engine is being updated to process multiple languages (further work on East Asian glyphs is underway) and to let emoji contribute to the overall sentiment score.


The Thing-onna-Stick is a slow data luminaire that derives its shifting patterns of colour from a geo-fenced Twitter feed, via a sentiment analysis algorithm that fuses Finn Årup Nielsen's AFINN-111 dataset and VADER (Valence Aware Dictionary and sEntiment Reasoner), both of which are lexicon-based approaches to sentiment analysis.
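The general idea of fusing two lexicon-based scores can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the code running inside Thing-onna-Stick: the two miniature lexicons are hypothetical stand-ins for AFINN-111 (integer valences from -5 to +5) and the much larger VADER lexicon, the tokenizer is deliberately naive, and the equal-weight average is an assumed fusion rule. Only the squashing function mirrors VADER's normalisation of its compound score (with alpha = 15); real VADER also applies rules for negation, intensifiers and capitalisation that are omitted here.

```python
import math
import re

# Hypothetical miniature lexicons for illustration only. The real AFINN-111
# list rates roughly 2,500 words from -5 to +5; VADER's lexicon is far larger.
AFINN_SAMPLE = {"good": 3, "great": 3, "bad": -3, "terrible": -3, "love": 3, "hate": -3}
VADER_SAMPLE = {"good": 1.9, "great": 3.1, "bad": -2.5, "terrible": -2.1, "love": 3.2, "hate": -2.7}


def tokenize(text):
    """Naive tokenizer: lowercase words and apostrophes only (ignores emoji)."""
    return re.findall(r"[a-z']+", text.lower())


def lexicon_sum(tokens, lexicon):
    """Sum the valence of every token found in the lexicon; unknown words add 0."""
    return sum(lexicon.get(t, 0) for t in tokens)


def normalise(score, alpha=15.0):
    """Squash an unbounded sum into (-1, 1), as VADER does for its compound score."""
    return score / math.sqrt(score * score + alpha)


def fused_sentiment(text):
    """Average the two normalised lexicon scores (equal weighting is an assumption)."""
    tokens = tokenize(text)
    afinn = normalise(lexicon_sum(tokens, AFINN_SAMPLE))
    vader = normalise(lexicon_sum(tokens, VADER_SAMPLE))
    return (afinn + vader) / 2
```

For example, `fused_sentiment("I love this, it is great")` comes out at about 0.85, while a sentence containing no lexicon words scores exactly 0. Because both lexicons are hand-rated word lists, any bias in those ratings passes straight through to the final score.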

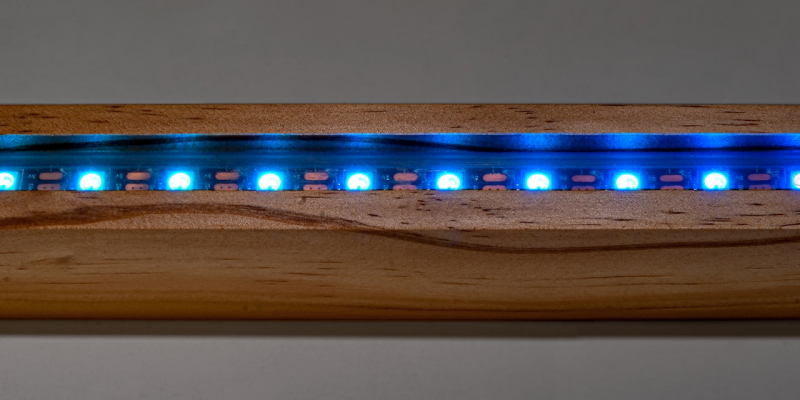

The electronic hardware is concealed within a Tasmanian Oak post, with an RGB LED strip exposed along one side so that the coloured light can either rake across a surface the post stands against or diffuse into the surrounding space.

By transducing sentiment scores into owner-customisable colours, the Thing-onna-Stick lets its owner further curate their chosen data stream and how it is presented, depending on where the device is placed.
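One way such a score-to-colour transduction could work is sketched below. It assumes a sentiment score normalised to the range -1 to +1, an owner-chosen palette of three colours (negative, neutral, positive) and simple linear interpolation between them. An exponential moving average slows the changes so the light drifts rather than flickers, in keeping with a slow data luminaire. The three-colour scheme, the default RGB values and the smoothing factor are all hypothetical; the device's actual mapping is not documented here.

```python
from dataclasses import dataclass

RGB = tuple[int, int, int]


@dataclass
class Palette:
    """Owner-chosen colours for each end of the scale (default values are hypothetical)."""
    negative: RGB = (200, 40, 20)
    neutral: RGB = (255, 180, 90)
    positive: RGB = (40, 120, 255)


def lerp(a: RGB, b: RGB, t: float) -> RGB:
    """Linearly interpolate between two colours, with t in [0, 1]."""
    return tuple(round(x + (y - x) * t) for x, y in zip(a, b))


def score_to_rgb(score: float, palette: Palette) -> RGB:
    """Clamp the score to [-1, 1], then blend from neutral toward the matching end colour."""
    s = max(-1.0, min(1.0, score))
    if s < 0:
        return lerp(palette.neutral, palette.negative, -s)
    return lerp(palette.neutral, palette.positive, s)


class SlowScore:
    """Exponential moving average: each new score only nudges the displayed value."""

    def __init__(self, smoothing: float = 0.05):
        self.smoothing = smoothing  # fraction of the gap closed per update
        self.value = 0.0

    def update(self, score: float) -> float:
        self.value += self.smoothing * (score - self.value)
        return self.value
```

With a small smoothing factor, a single strongly worded post barely shifts the colour, whereas a sustained change in the local mood gradually moves the light toward the owner's chosen end colour.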

Part of the rationale behind this work is also to acknowledge the biases inherent in the lexicons. As with the Happiness Index, questions about the authenticity of data-processing algorithms belong to a salient and pressing conversation we need to have as our engagement with machine learning deepens.